What Is the EU's Artificial Intelligence Act - and Why Do You Need to Get Ready Now?

- March 11, 2024

Did you know that 64% of business owners see AI as having the potential to strengthen customer relationships? And that over 60% of business owners believe AI will boost productivity?

But did you also know that 70% of consumers are either very concerned or somewhat concerned about businesses’ use of AI tools?

This mix of potential and concern is why organizations need to use AI responsibly and transparently. And it’s why we must regulate their use of artificial intelligence (AI) systems and general-purpose AI models.

It’s also your reason for diving into this blog post since we answer all key questions – including the ‘big’ why:

How does the AI Act distinguish between different types of AI?

Overall, the AI Act distinguishes between AI systems, general-purpose AI (GPAI) models, and GPAI systems.

In the AI Act, an AI system is defined as:

“A machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

An example of an AI system would be a machine-learning-based system for self-driving cars or a machine-learning-based system that can predict diagnoses.

Conversely, a GPAI model is defined as:

“An AI model, including when trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable to competently perform a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications. This does not cover AI models that are used before release on the market for research, development and prototyping activities.”

Examples of GPAI include ChatGPT and Copilot.

A GPAI system is an AI system that is based on a general-purpose AI model. It has the capability to serve a variety of purposes, both for direct use as well as for integration into other AI systems.

What are the different risk levels within AI?

The AI Act sorts AI systems into four levels based on the risk they pose. The four levels are:

- Prohibited AI

- High-risk AI

- Limited risk AI

- Minimal risk AI

Who does the AI Act apply to?

The AI Act will apply to those that intend to place high-risk AI systems on the market or put them into service in the EU – regardless of whether the operators are based in the EU or a third country.

More specifically, the AI Act distinguishes between:

Providers of AI systems or general-purpose AI models

Legal or physical persons who develop or offer AI systems or general-purpose AI models on the European market

Deployers of AI systems

Legal or physical persons who use AI systems in a professional setting

Importers of AI systems

Operators who import AI systems offered by organizations outside the EU

Distributors of AI systems

Operators, other than providers and importers, who make AI systems available in the EU

Authorised representatives

Legal or physical persons who have received and accepted a written mandate from a provider of an AI system or an AI model

The scope of the EU’s AI Act is also extraterritorial, meaning that it applies to organizations outside the EU. This includes, for instance, third-country providers when the output from their AI system is used within the EU.

Some areas are excluded from the scope of the AI Act. These are:

- National security

- Military and defense

- Research & Development

- Open-source software (partially).

When do you need to comply with the AI Act?

The European Commission has announced different deadlines for when organizations need to comply with and implement the EU’s AI Act.

The deadlines are:

- February 2, 2025, for prohibited AI

- August 2, 2025, for General-Purpose AI (GPAI)

- August 2, 2026, for high-risk AI systems (under Annex III) and the rest of the AI Act

- August 2, 2027, for high-risk AI used for safety components (under Annex I)

So, unlike the GDPR, the AI Act takes effect in stages, depending on the type of AI system or GPAI.

These staggered deadlines are, from our point of view, a helping hand to organizations:

We don’t have to guess how we should prioritize compliance with each risk level – the EU has already made the prioritization clear and realistic for us. It also gives us the chance to prepare, learn, and adapt along the way.
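To make the staged timeline concrete, here is a minimal sketch in Python that encodes the four deadlines listed above and reports which ones have passed on a given date. The category keys and function names are our own hypothetical labels, not terms from the AI Act.

```python
from datetime import date

# Deadlines as listed above; the keys are hypothetical labels for illustration.
DEADLINES = {
    "prohibited_ai": date(2025, 2, 2),
    "gpai": date(2025, 8, 2),
    "high_risk_annex_iii": date(2026, 8, 2),
    "high_risk_annex_i": date(2027, 8, 2),
}


def obligations_in_force(today: date) -> list[str]:
    """Return the categories whose deadline has passed, earliest first."""
    ordered = sorted(DEADLINES.items(), key=lambda item: item[1])
    return [name for name, deadline in ordered if deadline <= today]


def days_remaining(category: str, today: date) -> int:
    """Days until the deadline for a category (negative once it has passed)."""
    return (DEADLINES[category] - today).days


if __name__ == "__main__":
    check_date = date(2025, 9, 1)
    print(obligations_in_force(check_date))
    print(days_remaining("high_risk_annex_iii", check_date))
```

A lookup like this could feed a simple compliance calendar, so the nearest deadline always gets attention first.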

Finally, the EU has an obligation regarding the code of practice, which:

- Needs to be ready 9 months after the adoption of the AI Act

- Shall support compliance with the AI Act

- Will be made by the AI Office.

What are the penalties?

- Up to 7% of global annual turnover or €35 million for breaking the rules regarding prohibited AI systems

- Up to 3% of global annual turnover or €15 million for other violations.

- Up to 1.5% of global annual turnover or €7.5 million for giving incorrect information to notified bodies and national competent authorities.

Note that the calculation of fines should take into account whether the company is an SME or a startup.
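As a rough illustration of how these caps play out, the sketch below computes the maximum possible fine for a given turnover. The percentages and amounts are the ones listed above. How the two caps combine is an assumption on our part: we assume the higher of the two applies to most companies and the lower of the two to SMEs and startups, so please check this against the final text of the Act. The names `PENALTY_CAPS` and `max_fine` are hypothetical.

```python
# Caps as listed above: (basis points of global annual turnover, fixed cap in EUR).
# 700 basis points = 7%, 300 = 3%, 150 = 1.5%.
PENALTY_CAPS = {
    "prohibited_ai": (700, 35_000_000),
    "other_violation": (300, 15_000_000),
    "incorrect_information": (150, 7_500_000),
}


def max_fine(violation: str, global_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of the fine in EUR for a violation type.

    Assumption (not stated in the list above): the higher of the two caps
    applies in general, and the lower of the two applies to SMEs and startups.
    """
    basis_points, fixed_cap = PENALTY_CAPS[violation]
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = global_turnover_eur * basis_points // 10_000
    if is_sme:
        return min(turnover_cap, fixed_cap)
    return max(turnover_cap, fixed_cap)


if __name__ == "__main__":
    # A company with EUR 1 billion turnover using a prohibited AI system.
    print(max_fine("prohibited_ai", 1_000_000_000))
    # An SME with EUR 100 million turnover in the same situation.
    print(max_fine("prohibited_ai", 100_000_000, is_sme=True))
```

These are ceilings only; the actual fine in a given case would be set by the authorities and can be lower.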

Who can 'raise the alarm'?

- The European AI Office and the AI Board are established to ensure proper implementation of the rules.

- National authorities will enforce the AI Act at member state level.

- Any individual will be able to make complaints about non-compliance with the AI Act.

Why do you need to get ready for the AI Act now?

Now that we’ve covered all the basics, we also know that we need to address the elephant in the room:

Why should you prepare for a regulation that we haven’t seen the final version of?

There are two reasons for this.

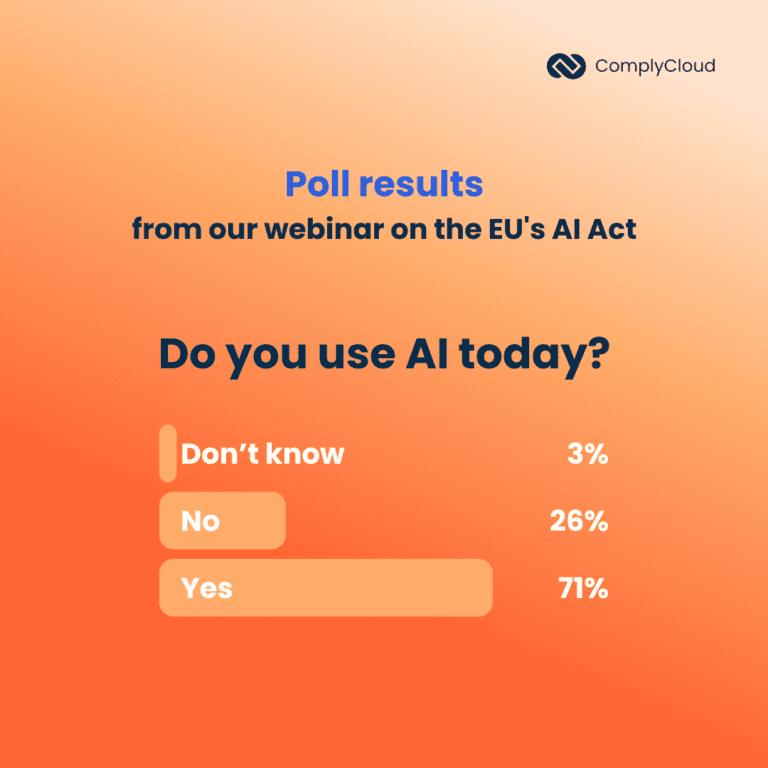

#Reason 1: Most companies already use AI tools

We know that AI tools have already become popular and are being used extensively by businesses today. We also had that confirmed at our first ComplyCloud webinar on the EU’s AI Act in February 2024, when we asked our 263 attendees whether they use AI today: 71% said yes.

So, preparing for the AI Act is simply the right and responsible thing to do – also from an economic point of view. It’s worth considering the AI tools you plan to implement now – it could be a waste of time and money if they become illegal or are classified as high-risk under the AI Act.

#Reason 2: We know the GDPR obligations

There’s an overlap between the GDPR and the AI Act, since mapping and assessing AI systems is already part of your GDPR obligations. We’ve already seen this in practice, as the Danish Data Protection Agency has responded to two requests: one from a municipality and one from a private company, both of which wanted to use AI tools to process personal data.

From our point of view, these requests and the DPA’s responses to them are a strong indicator of the awareness that already exists around the use of AI tools, despite the lack of regulation.

Last, but not least: Words of wisdom from our CEO

Artificial intelligence and the EU’s AI Act quickly became the centre of attention. However, before we get ‘carried away’, we should all reflect on the meaning of ‘transparency’ in a broader context than technical transparency.

That’s what our CEO Martin Folke Vasehus has done in this blog article – and we suggest that you take a deep dive into his words of wisdom.

Dive into our CEO article on the AI Act

Do you also think that legislation and transparency should go hand in hand?